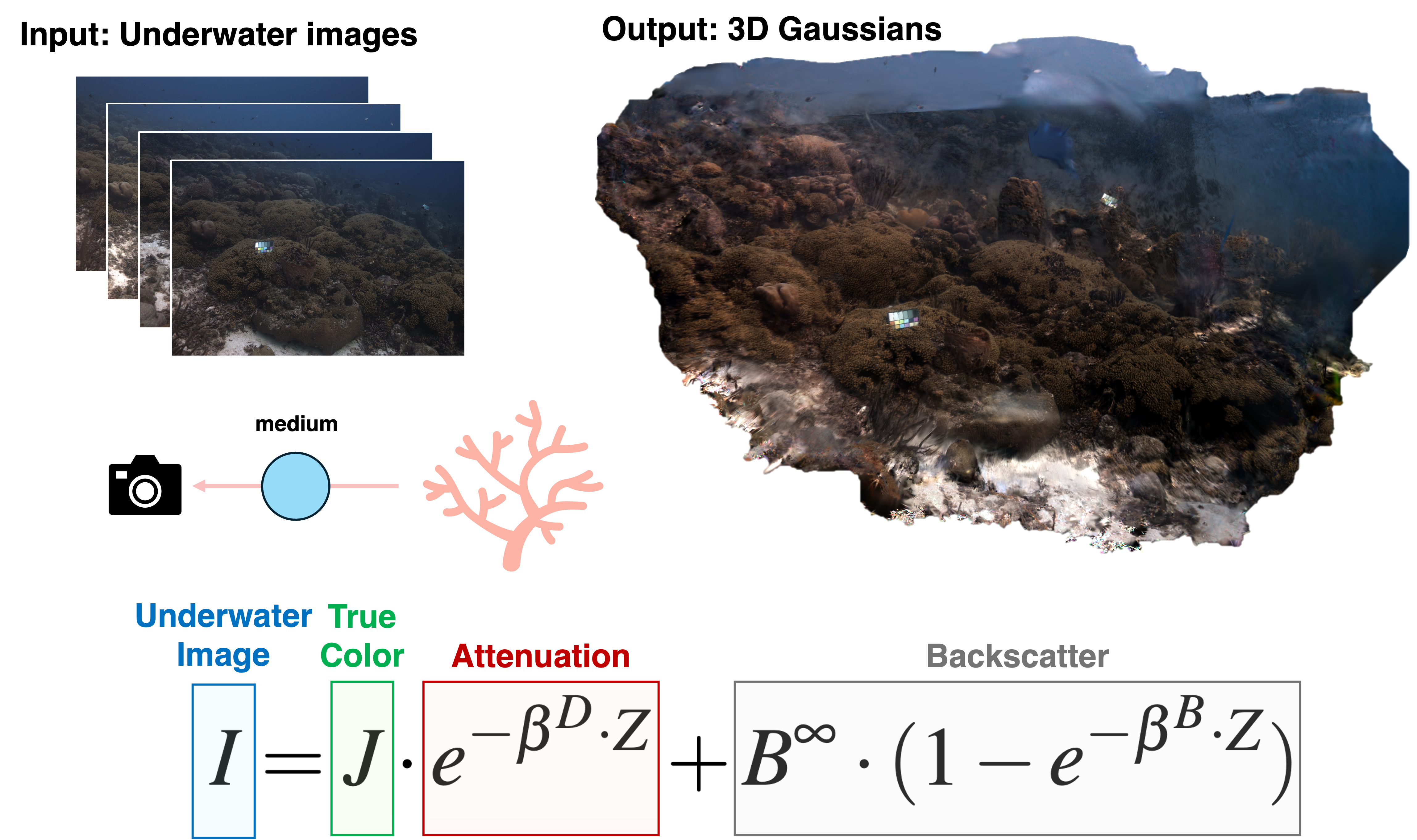

We introduce SeaSplat, a method to enable real-time rendering of underwater scenes leveraging recent advances in 3D radiance fields. Underwater scenes are challenging visual environments, as rendering through a medium such as water introduces both range- and color-dependent effects on image capture. We constrain 3D Gaussian Splatting (3DGS), a recent advance in radiance fields enabling rapid training and real-time rendering of full 3D scenes, with a physically grounded underwater image formation model. Applying SeaSplat to the real-world scenes from the SeaThru-NeRF dataset, a scene collected by an underwater vehicle in the US Virgin Islands, and simulation-degraded real-world scenes, we not only see increased quantitative performance on rendering novel viewpoints from the scene with the medium present, but are also able to recover the underlying true color of the scene and restore renders without the intervening medium. We show that the underwater image formation model helps learn scene structure, yielding better depth maps, and that our improvements maintain the significant computational advantages afforded by a 3D Gaussian representation.
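To make the "range- and color-dependent effects" concrete, here is a minimal per-pixel sketch of a commonly used underwater image formation model: an attenuated direct signal plus backscatter, each with its own per-channel coefficient. This page does not state the exact formulation SeaSplat uses, so the model form, the function names (`apply_medium`, `remove_medium`), and all parameter names and values are assumptions chosen for illustration, not the authors' implementation.

```python
import math

def apply_medium(true_color, depth, beta_d, beta_b, b_inf):
    """Degrade a true color seen through water at range `depth`.

    Assumed model, per color channel c:
        I_c = J_c * exp(-beta_d_c * z) + B_inf_c * (1 - exp(-beta_b_c * z))
    All arguments except `depth` are per-channel sequences (e.g. RGB).
    """
    observed = []
    for j, bd, bb, binf in zip(true_color, beta_d, beta_b, b_inf):
        direct = j * math.exp(-bd * depth)                  # attenuated scene signal
        backscatter = binf * (1.0 - math.exp(-bb * depth))  # veiling light from the water
        observed.append(direct + backscatter)
    return observed

def remove_medium(observed, depth, beta_d, beta_b, b_inf):
    """Invert the assumed model: subtract backscatter, then undo attenuation."""
    restored = []
    for i, bd, bb, binf in zip(observed, beta_d, beta_b, b_inf):
        backscatter = binf * (1.0 - math.exp(-bb * depth))
        restored.append((i - backscatter) * math.exp(bd * depth))
    return restored

# Hypothetical RGB values: red attenuates fastest, backscatter is blue-green.
J = [0.8, 0.5, 0.3]
beta_d = [0.6, 0.2, 0.1]
beta_b = [0.5, 0.3, 0.2]
b_inf = [0.05, 0.3, 0.4]

I = apply_medium(J, 3.0, beta_d, beta_b, b_inf)
J_restored = remove_medium(I, 3.0, beta_d, beta_b, b_inf)
```

Under this model, a pixel at zero range keeps its true color, while a very distant pixel converges to the backscatter color `b_inf`. Removing the medium requires per-pixel depth, which is one reason a depth-aware scene representation pairs naturally with such a model.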

Here we highlight the ability of SeaSplat to render novel views while removing the medium and restoring the true color of the underlying scene.

Here we display side-by-side videos comparing 3D Gaussian Splatting and SeaSplat in rendering underwater scenes with the medium present.

@inproceedings{yang2025seasplat,
  author = {Yang, Daniel and Leonard, John J. and Girdhar, Yogesh},
  title = {SeaSplat: Representing Underwater Scenes with 3D Gaussian Splatting and a Physically Grounded Image Formation Model},
  booktitle = {2025 IEEE International Conference on Robotics and Automation (ICRA)},
  year = {2025},
}